Raising Questions

Well, we're in month eleven now, and it doesn't get easier, does it? How are you coping? What's helping you get through your week? What do you need help with?

These essays have been helping me structure and track my experience of this time. I think of it as a kind of flood mitigation, allowing me to slow and channel the firehose of news as I collect, compare, and order impressions. My general method has been to anchor myself in a full-length book as I address one of my (many) knowledge gaps. Then, as I craft a response to share with you here, I may also set the book in conversation with related media, such as late-breaking commentary from journalists or expert analyses. It's both an intellectual and emotional task, as I grapple with giving a coherent shape to the things I've been engaged with each week.

As I want to keep emphasizing, I'm not an expert—I'm just a person out here trying to figure some things out. That's why I appreciate hearing from readers. Conversations can help us work on this together.

My goal is to better understand the events, beliefs, processes, and systems that have led us to our present moment, and get more clarity on a) what I'm seeing happen, b) how we might imagine more positive alternative scenarios, and c) what our actions toward realizing those scenarios might be. Learn, Imagine, Act.

I started the project with a long list of book titles. But in reality, I'm going by my gut a lot of the time. I frequently pivot to topics that feel particularly pressing to me in the face of new developments. I add new titles as I come across them in my research, and revisit earlier readings or authors as I feel a need. Admittedly, there are some books on my shelf that I'm not emotionally ready to take on. All in all, it's been an emergent process.

What has come up for me most strongly this week is the topic of AI and its increasing presence in our daily lives. I've been bumping into lots of examples of AI-generated graphics and feel-good stories on social media. Concerning headlines are appearing in my feed about its implications in public and private settings, from education to mental health to personal use.

People seem to be struggling to have nuanced conversations about AI (I prefer to call it SCAI—"so-called artificial intelligence"), because we haven't yet learned precise language to capture what we think it is and does. I've heard people using words like "personality" and "companion," when referring to their chatbot "conversations." At a time when large-scale efforts to dehumanize other people are being waged, language use that humanizes machines in this way is deeply disturbing.

The UNESCO page on Artificial Intelligence and Emerging Technologies offers broad language in its definition: "The Recommendation interprets AI broadly as systems with the ability to process data in a way which resembles intelligent behaviour. This is crucial as the rapid pace of technological change would quickly render any fixed, narrow definition outdated, and make future-proof policies infeasible."

As I read it, the idea of rapidly changing data-processing systems is one important chunk of meaning, and the phrase "resembles intelligent behaviour" is another. The word "resembles" is doing a lot of work here.

The site goes on to state a general stance:

"The rapid rise in artificial intelligence (AI) has created many opportunities globally, from facilitating healthcare diagnoses to enabling human connections through social media and creating labour efficiencies through automated tasks.

However, these rapid changes also raise profound ethical concerns. These arise from the potential AI systems have to embed biases, contribute to climate degradation, threaten human rights and more. Such risks associated with AI have already begun to compound on top of existing inequalities, resulting in further harm to already marginalised groups."

I concur that there are many beneficial applications of data-processing systems. The line about risks that "have already begun to compound on top of existing inequalities" sums up my main concern.

I'm currently on the hunt for well-researched, relevant, full-length books (recommendations, anyone?), so I'm not prepared to delve into the topic in detail here today. But I do want to pull together a collection of useful questions to ask of AI and of new technologies in general.

I'm sharing these questions and thoughts here because the subject relates to pretty much everything else I've been writing about in this series: sophisticated mechanisms for marketing, surveilling, and propagandizing are ever more embedded in our technologies and metastasizing in our lives. Much of what we look at, especially online, has specific designs on us, whether we recognize it or not.

We need to be wary of the myriad forms of social control that people with various motives are attempting to lace through our psyches and communal spaces. Even if they aren't succeeding in their particular goals, they may be influencing the way we perceive our world. See my two discussions of the book Invisible Rulers: The People Who Turn Lies into Reality:

We all possess finite amounts of energy and attention. Our first line of defense may be simply pausing to ask questions, in order to make more conscious decisions about how we expend and direct them.

Our Attention and the Impact of Technologies

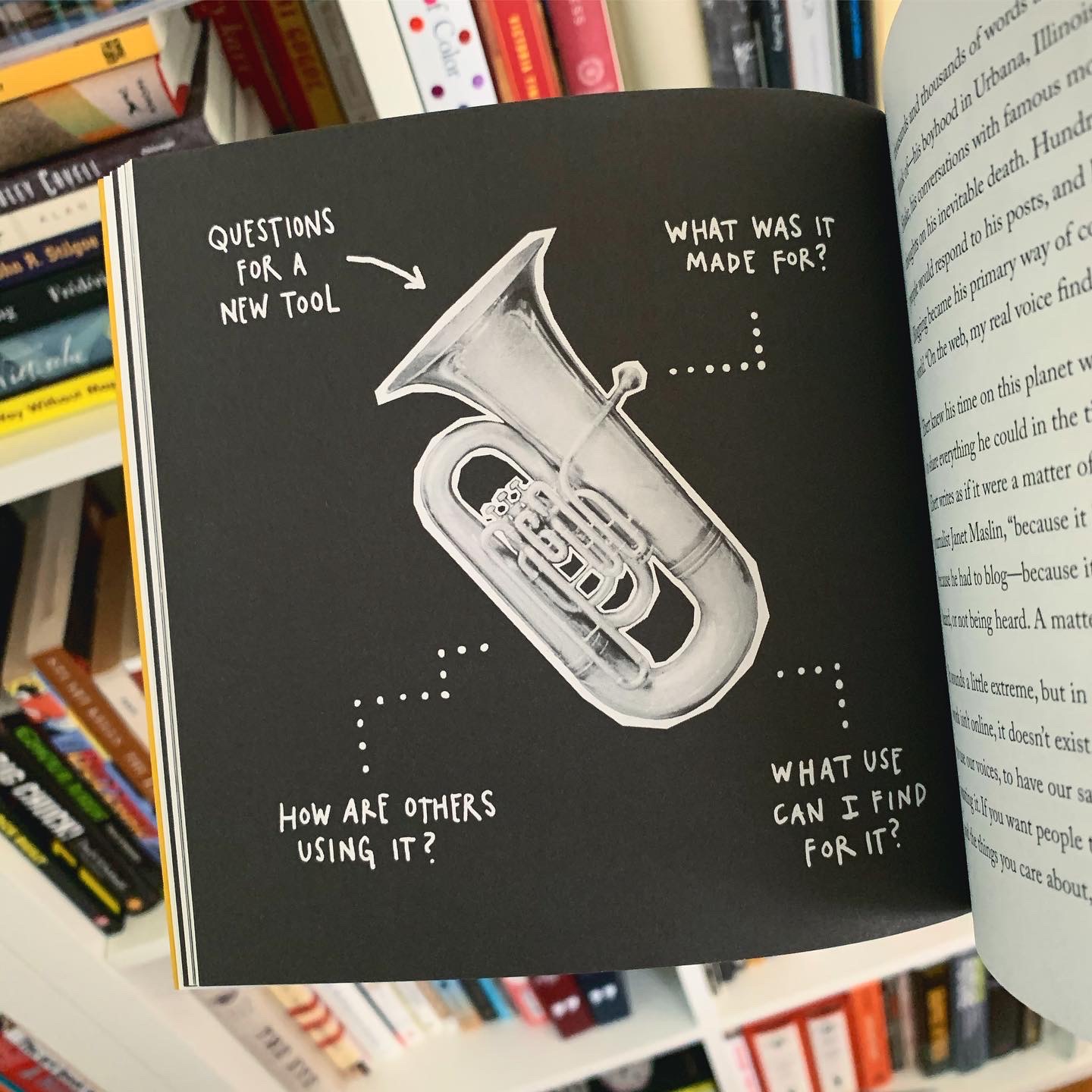

I first ran across Stephanie Mills' compilation of "78 Questions to Ask of Any Technology" more than a decade ago. My search for it online this week turned up this blog from artist Austin Kleon. He didn't cite the source I was seeking, but thanks to him, I'm now also aware of Neil Postman's shorter list of questions, as well as lists created by Wendell Berry and L. M. Sacasas.

I appreciate Kleon's thoughts as a working artist, especially about the reciprocal relationship we have with tools:

"Knowing what’s worth spending your time and attention on is half the game in life, and, for better or worse, and probably because I’m privileged enough to hustle a little less than I used to, I tend these days to err on the side of technologies that have been around for hundreds of years, technologies like paper and pencils.

The fact of the matter is, McLuhan was right: 'We shape our tools and then our tools shape us.'” Austin Kleon

I'd recommend checking out what Kleon shares in his blog:

Here's the list Kleon cites from Postman:

1. What is the problem to which technology claims to be a solution?

2. Whose problem is it?

3. What new problems will be created because of solving an old one?

4. Which people and institutions will be most harmed?

5. What changes in language are being promoted?

6. What shifts in economic and political power are likely to result?

7. What alternative media might be made from a technology?

When I followed Kleon's links to Sacasas, I found several thoughtful essays on ethics and technology that are well worth reading. His judgment that the "worst case malevolent uses are not the only kinds of morally significant aspects of our technology worth our consideration" also resonates with me. His 41 questions are laid out here:

In another essay, Sacasas argues that any discussion of ethics is already complicated by human subjectivity, and an existing bias in certain cultural circles toward the myth of technological neutrality:

"We should not forget that ours is an ethically diverse society and simply noting that technology is ethically fraught does not immediately resolve the question of whose ethical vision should guide the design, development, and deployment of new technology. Indeed, this is one of the reasons we are invested in the myth of technology’s neutrality in the first place: it promises an escape from the messiness of living with competing ethical frameworks and accounts of human flourishing." Sacasas ( https://thefrailestthing.com/2017/11/06/one-does-not-simply-add-ethics-to-technology/)

Granted, asking questions does not necessarily free us from these problematic "presets." But it can help us try to think in more breadth and depth about our relationships with technologies of all sorts.

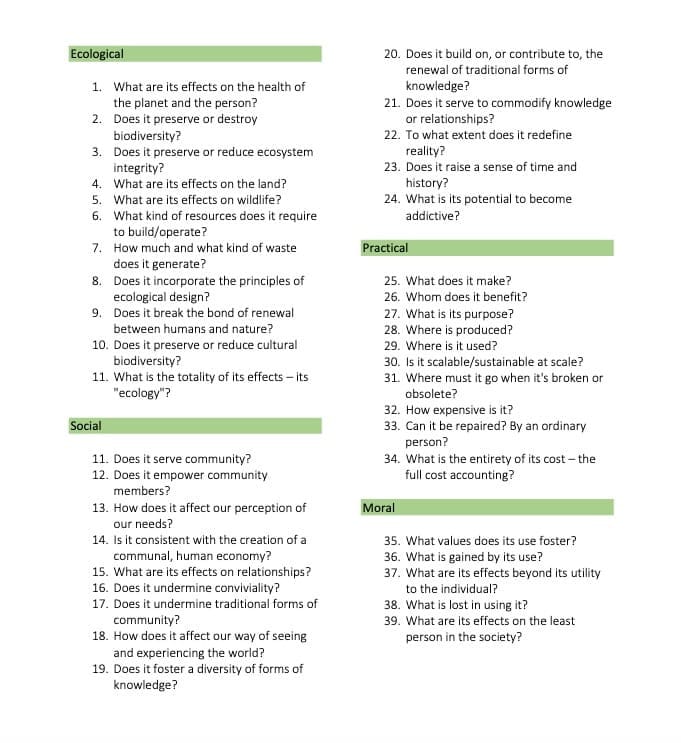

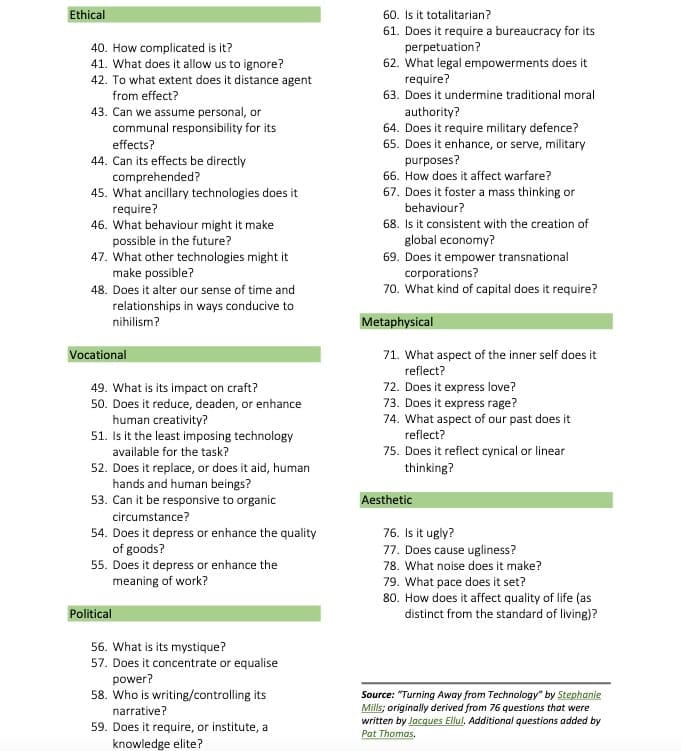

I haven't reproduced Sacasas's list here, because I think many of his questions are subsumed in the original document I was seeking. Here's the most recent version, now shared as "80 Questions to Ask About Any Technology" from Howl at the Moon. Here's the PDF version of the screenshots below:

https://howlatthemoon.org.uk/wp-content/uploads/2022/01/80-Reasonable-Questions_HATM.pdf

I'd love it if you'd spend some time with it and let me know your thoughts.