Human Agency and Machine Thinking

"Still, AI doesn't have to help us save the world to be a socially legitimate technology. As long as the objectives and outputs of AI models are transparent and contestable, their benefits, costs, and risks justly distributed, and their environmental footprints justifiable, AI systems have a place in a sustainable future." Shannon Vallor, AI Mirror: How to Reclaim Our Humanity in an Age of Machine Thinking

Toward the end of her book, philosopher and AI ethicist Shannon Vallor gives us this concise list of the necessary conditions for AI to play a constructive role. The "as long as" part of this sentence is the crux. Even without having read the two hundred pages leading up to this, we can predict her next statement: "But today these conditions are almost never met . . ." (216).

This is where the "reclaiming" in her book's subtitle comes in. Aside from the actual capacities of the technology itself, what our future looks like depends on the decisions humans make.

If leaders and corporations don't work to meet these conditions, she warns, a backlash will arise: "the social license for AI to operate will eventually be withdrawn by publics who increasingly see little personal advantage from enduring the status quo, having less and less to lose. That outcome is likely to be messy and destructive for everyone."

To create the necessary social legitimacy, Vallor argues we need "better systems of technology governance to reset the innovation ecosystem's incentives." She's encouraging us to exert our agency—"grab the wheel"—while it is still possible, saying, "we're not passengers on this ride, with AI driving the bus and us just hoping it takes us somewhere nice."

I agree that we urgently need to pay attention, so that we do not "willingly sleepwalk through the process of reconstituting the conditions of human existence" (she's quoting here from technology philosopher Langdon Winner). We need to take an active role in making sure this technology has fair, working guardrails.

Waking up and getting sufficiently focused and activated may be difficult, though, because so many people do feel like helpless passengers at this point. Lots of us are confused about what AI even is, uncertain about which forms may be valid and helpful, and even unaware of the extent to which AI is already affecting our lives. And we've been subjected to large helpings of hype.

Perhaps some have been in a state of what eco-theologian Thomas Berry called "technological entrancement," because of the convenience technology has seemed to offer. Others have become steeped in a kind of learned helplessness, naively coming to regard the tech-savvy as being light-years ahead of us in intellect and therefore deserving of their power. Vallor also contends that much in our cultural history and conditioning has pushed us into a "crisis of self-forgetting and loss of confidence in human potential," prompting defeated mindsets that are then exacerbated by AI mirrors.

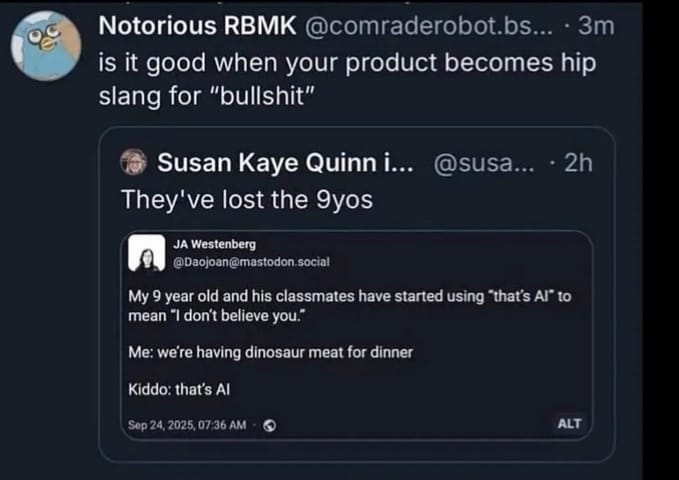

Meanwhile, at least from my anecdotal observations, the kind of backlash Vallor is concerned about could be building. A year ago, I overheard one of my twenty-something kids muttering to their phone, "F***-off AI, you bitch-ass, plagiarist robot!" Last month that same kid permanently signed out of a language learning app because, they said, it had become too "AI forward."

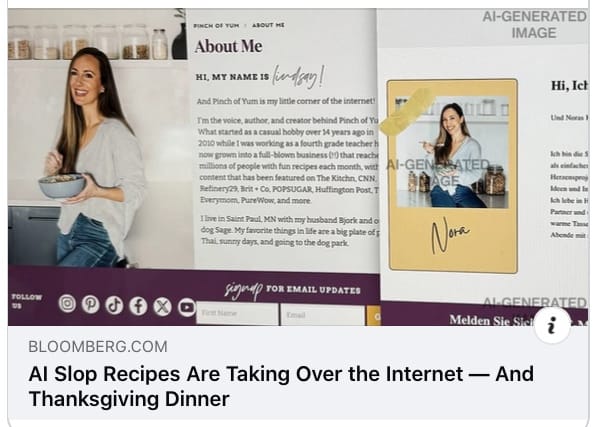

Just in the last few weeks, I've randomly come across these posts on social media:

Lots of us are likely cobbling together a sense of what's going on with AI from piecemeal sources such as news snippets, family members, various discussion threads on our favored platforms, and whatever our personal experiences with it have been. Here's an unscientific sampling of things I've heard lately from people in the real world: one friend's husband tells her to "ask AI about everything," while another friend doesn't even know what Grok is. Another friend works with adult clients who tell her they ask AI for relationship and even spiritual advice. My other twenty-something kid finds it isn't at all helpful for complex questions in their field and is concerned about it "hallucinating," but thinks they might find a use for it in a specific lab scenario.

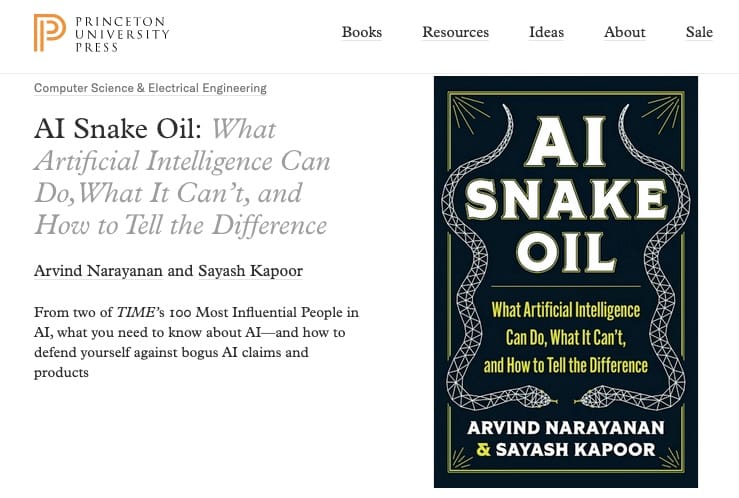

My own knowledge about AI has been patchy, which is why I've been starting to look for more in-depth books. If you have recommended reading, please let me know. My academic background is steering me first toward peer-reviewed publications from university presses, such as Vallor's book from Oxford, and AI Snake Oil, which I'm just starting.

The first step in claiming our agency is to find out more about what, exactly, is going on with AI. I'll be discussing my readings more as we go along, but if you'd like a softer intro to either of these books, you can look at this interview with Shannon Vallor,

or check out the AI Snake Oil authors' site here:

In closing, I'd like to know what your questions and impressions, uses or abuses around AI might be. How much do you feel you know about it, and where and how did you learn it? Please feel free to comment below. Let's get a conversation going!